Tales, experiences, and thoughts on building and embracing AI — from someone who’s been writing code for 20+ years and AI-augmenting it for 2.

Automation Is Offshoring Without the Border Crossing

The Supreme Court just struck down Trump’s tariffs — but tariffs were always the wrong tool. Automation removes a company’s obligation to the society it profits from without crossing any border. That’s the version of the problem accelerating right now.

Automation Is Offshoring Without the Border Crossing — The Full Argument

Tariffs don’t bring jobs back — they make domestic automation more attractive than hiring. The full argument: why reshoring and robot replacement point the same direction, what the robot tax gets wrong, and what a real framework might look like.

The Cost of Software Is Falling. What Gets Built Next Is the Interesting Part.

The cost of software production is collapsing. But the outcome isn’t less software — it’s more ambitious software, built by smaller teams. The trillion-dollar one-person company is real. The question is whether you have the judgment to lead it.

When Your AI Tool Goes Dark: 7 Hours Without Gemini Image Generation

Gemini image generation went down at 2 AM and is still down at 9:22 AM, and counting. For engineering leaders building AI-augmented workflows, a multi-hour AI service outage isn’t just annoying; it’s a business continuity gap. Here’s how async design, LiteLLM/OpenRouter fallbacks, and local models close it.

What Can We Actually Do About It?

Part 3 of a conversation with Claude Opus 4.6. After mapping the scenarios and the odds, I pushed for something more actionable: what actually moves the needle?

Utopia, Dystopia, or the Muddle: What Are the Actual Odds?

Part 2 of a conversation with Claude Opus 4.6. We got past the developer questions and into the bigger ones: UBI, blue-collar automation, and what odds the AI gives us of coming out okay.

When Everyone Can Build Software, What's Left to Sell?

I asked Claude Opus 4.6 what happens when building software costs nothing. The answer was more honest — and more uncomfortable — than I expected.

Why Vendor Reliability Matters More in AI-Augmented Development

When the Stack Stops. It’s 2:00 PM on February 9th, 2026. I’m in flow: code is shipping, tests are green, the AI assistant is humming along. Then: nothing. I can’t push or pull code, and the entire AI-augmented development workflow is frozen. By 2:30 PM, service is restored. Thirty minutes of downtime. But here’s the thing: this isn’t really about GitHub. It’s about how AI-augmented development changes the calculus of vendor reliability. When your workflow depends on AI assistance maintaining context across your entire codebase, vendor downtime isn’t just inconvenient; it disrupts the exponential productivity gains that make AI development transformative. ...

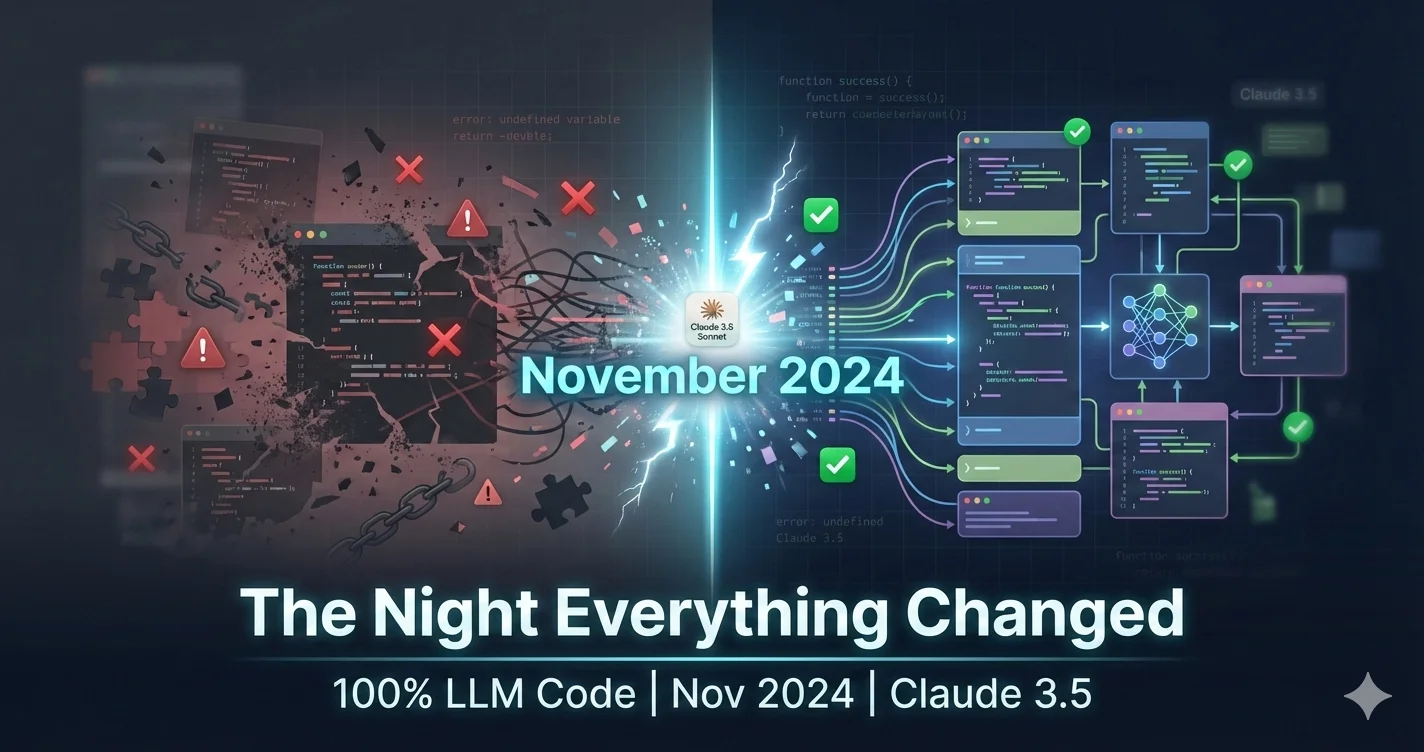

The Night Claude 3.5 Changed Everything: 100% LLM Code Story

After two failed attempts in early 2024, I found that Claude 3.5 Sonnet (October 2024) finally had the instruction-following capability I needed. I built a production deployment orchestrator in 3 days that saved weeks per use.

Why AI Needs Human Validation—And Eventually, Artificial DNA

TL;DR: When humans validate AI output, diverse perspectives catch diverse errors. When AIs validate each other, they converge, because similar training produces similar weights, which produces similar reasoning. Temperature adds surface-level noise, not new capabilities. Genuine novelty requires evolutionary mutation: artificial DNA. Expert vs. Researcher: Two Modes of Validation. I recently published a two-part series on space-based AI infrastructure. I’m not an aerospace engineer; I’m a software developer. That distinction defines how I validate AI output. ...