When Your AI Tool Goes Dark: 7 Hours Without Gemini Image Generation

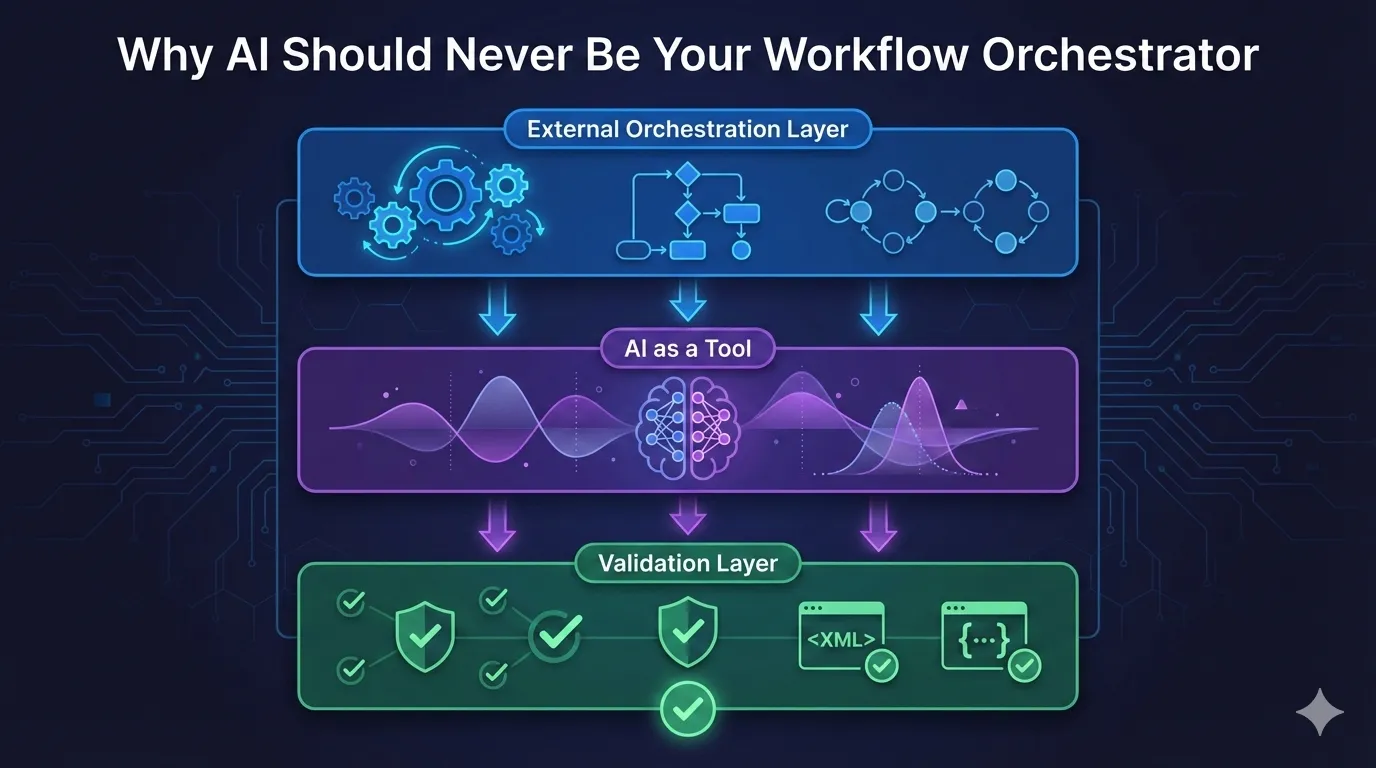

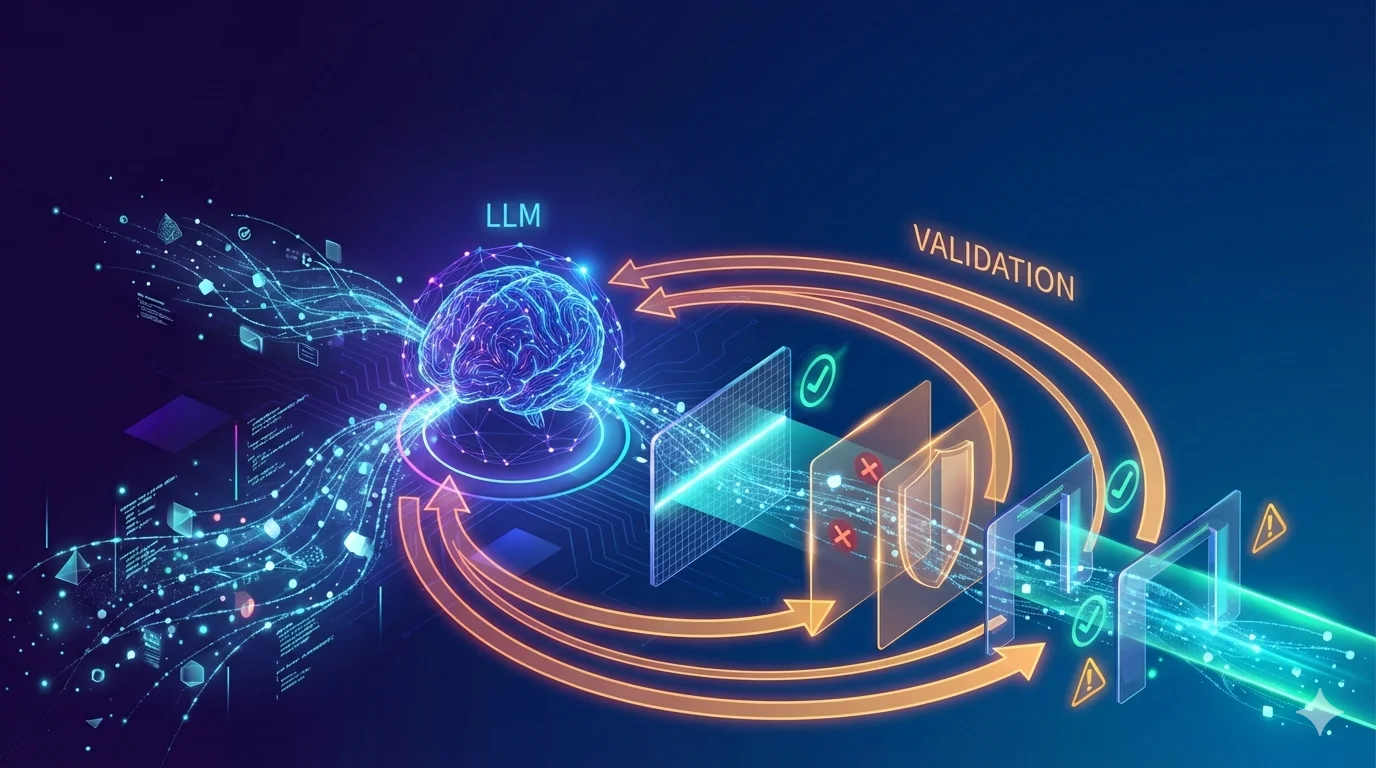

Gemini image generation went down at 2 AM and is still down as of 9:22 AM, more than seven hours and counting. For engineering leaders building AI-augmented workflows, a multi-hour AI service outage isn’t just annoying; it’s a business continuity gap. Here’s how async design, LiteLLM/OpenRouter fallbacks, and local models close it.