A Simple Guide to Neural Networks, Thinking, and Creativity

The Big Question

Can machines think like humans? It’s a question people have asked for decades, and with the explosion of AI like ChatGPT and Claude, it’s more relevant than ever. To understand this, we first need to know how both human brains and AI actually work.

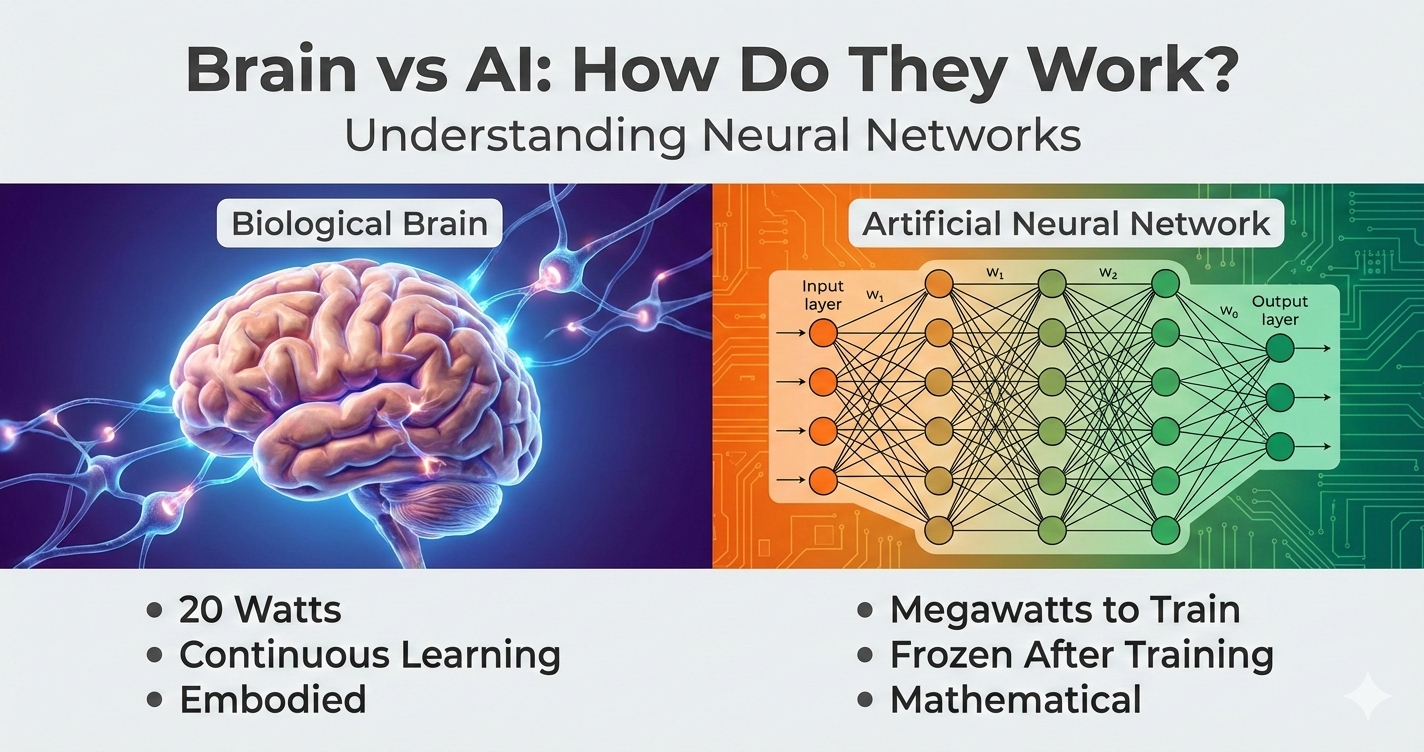

A surprising starting point: both are “neural networks” - systems of connected units that learn from experience. Your brain runs on about 20 watts (a dim light bulb), while training a large AI model can draw as much electricity as 20,000 homes. The similarities are fascinating, but the differences reveal even more about what makes us human.

What Is a Neuron?

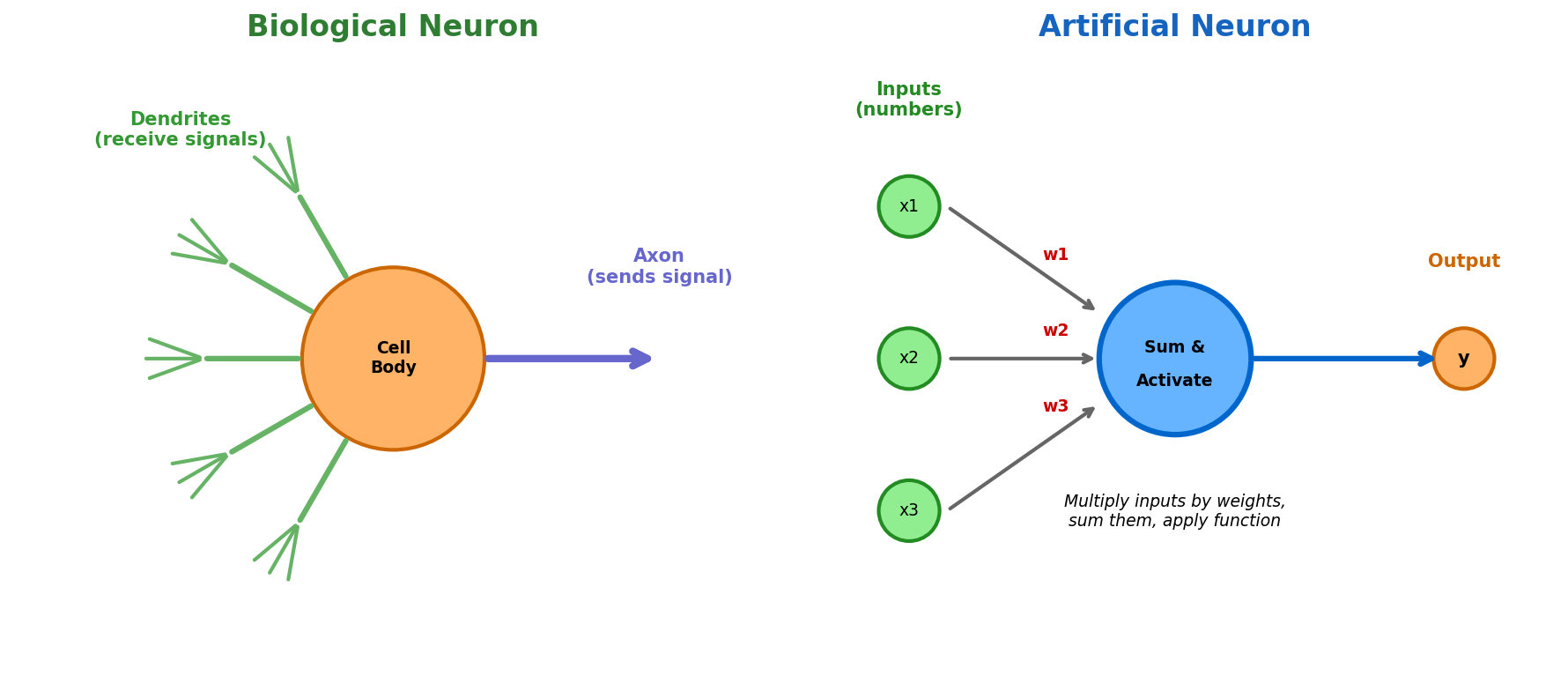

Think of neurons as tiny messengers. They receive information, process it, and pass it along. Both your brain and AI use neurons, but they work very differently:

Biological neurons have dendrites and axons; artificial neurons use mathematical operations

Real-World Example: Recognizing a Cat

Your Brain: You see a cat. Light hits your eyes, triggering millions of neurons. Some detect edges, some detect fur texture, some recognize the shape. They all work together - and you think “cat!” in milliseconds. You also feel something (maybe “cute!”).

AI: The image becomes numbers. Artificial neurons multiply those numbers by “weights” and pass them through layers. Eventually, the system outputs: “95% confident this is a cat.” No feeling involved - just math.
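To make the “just math” part concrete, here is a minimal sketch of a single artificial neuron. The feature scores, weights, and bias are made-up numbers chosen for illustration, and the sigmoid activation is one common choice assumed here, not something taken from a real cat detector. Real networks stack many such neurons in layers.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs, then an activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)


# Hypothetical scores for "edges", "fur texture", "cat-like shape".
features = [0.9, 0.8, 0.7]
# Illustrative weights and bias; a trained network would learn these values.
weights = [1.5, 2.0, 1.0]
bias = -0.7

confidence = neuron(features, weights, bias)
print(f"{confidence:.0%} confident this is a cat")
```

With these particular numbers the neuron lands at roughly 95%, but that figure comes entirely from the weights picked above. Change a weight and the “confidence” changes with it.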

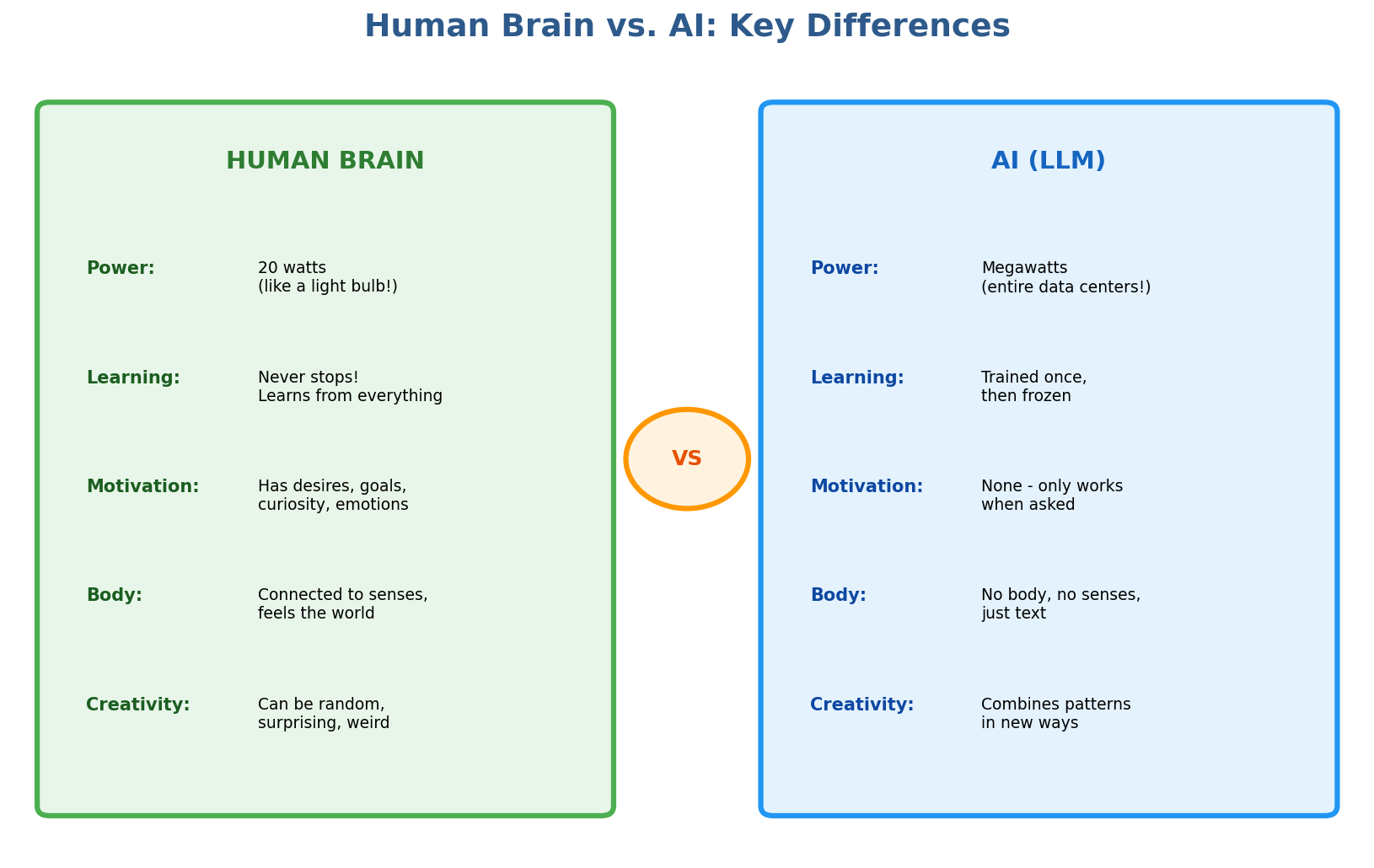

The Key Differences

While both are called “neural networks,” they’re quite different under the hood:

Comparing power consumption, learning capabilities, motivation, embodiment, and creativity

The Power Difference Is Mind-Blowing!

Your brain runs on about 20 watts - the same as a dim light bulb. Training a large AI model can use 20-25 megawatts continuously for months - enough to power 20,000 homes. Your brain is incredibly efficient!
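The arithmetic behind that comparison is easy to check. The sketch below uses only the figures quoted above (20 watts, 20-25 megawatts, 20,000 homes); the variable names are just for illustration.

```python
BRAIN_WATTS = 20                    # brain power quoted in the text
TRAINING_WATTS_LOW = 20_000_000     # 20 megawatts
TRAINING_WATTS_HIGH = 25_000_000    # 25 megawatts
HOMES = 20_000                      # homes quoted in the text

# How many brains could run on the power used for training?
ratio_low = TRAINING_WATTS_LOW / BRAIN_WATTS
ratio_high = TRAINING_WATTS_HIGH / BRAIN_WATTS

# Average power per home implied by the "20,000 homes" comparison.
watts_per_home = TRAINING_WATTS_LOW / HOMES

print(f"Training draws {ratio_low:,.0f} to {ratio_high:,.0f} times the brain's power")
print(f"That works out to about {watts_per_home:,.0f} watts per home")
```

In other words, the training run draws about a million times more power than one brain, and the homes comparison assumes each home averages around one kilowatt.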

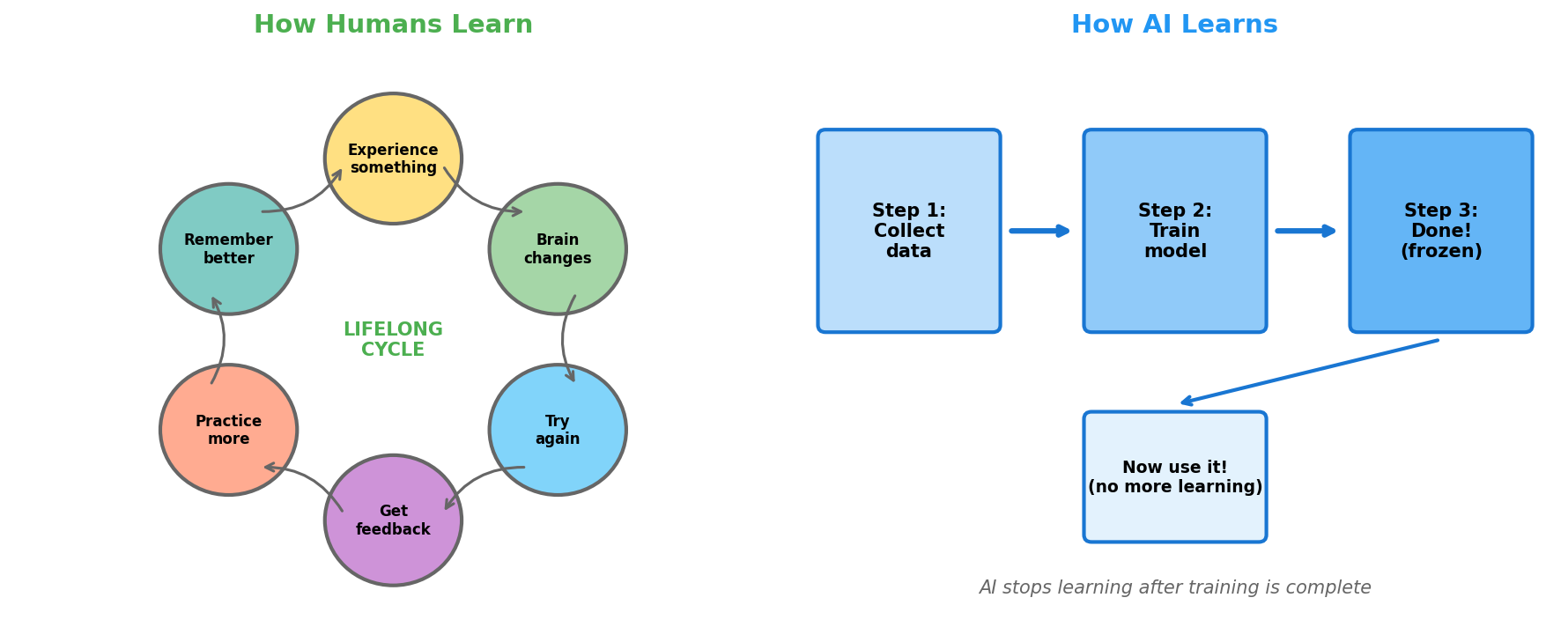

How Learning Works

One of the biggest differences is HOW these systems learn:

Humans learn continuously throughout life; AI models are trained once and then frozen

Example: Learning to Ride a Bike

You: You fall, your brain adjusts. You try again, get a little better. Over days/weeks, your neurons form stronger connections. Years later, you still remember - your brain keeps updating!

AI: A model would need millions of examples (say, bike-riding videos) during training. Once trained, its weights are fixed and it can’t learn from new experiences. It stays “frozen” until someone retrains it with new data.
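Here is a toy sketch of the “frozen” versus “keeps updating” contrast. It assumes a model with a single weight and a simple error-correcting update rule, which is a big simplification of both real AI training and real brains. The function names and numbers are hypothetical.

```python
def predict(weight: float, x: float) -> float:
    """A one-weight 'model': the output is just weight times input."""
    return weight * x


def update(weight: float, x: float, target: float, lr: float = 0.1) -> float:
    """Nudge the weight a little in the direction that shrinks the error."""
    error = predict(weight, x) - target
    return weight - lr * error * x


# Training phase: the right answer for input 1.0 is 2.0.
weight = 0.0
for _ in range(50):
    weight = update(weight, 1.0, 2.0)

# After training, a deployed model keeps this weight as-is.
frozen = weight

# The world changes: the right answer is now 3.0.
# A learner that keeps updating adapts; the frozen one does not.
continual = frozen
for _ in range(50):
    continual = update(continual, 1.0, 3.0)

print(f"frozen model still predicts    {predict(frozen, 1.0):.2f}")
print(f"continual learner now predicts {predict(continual, 1.0):.2f}")
```

The frozen copy keeps giving the old answer (close to 2) no matter how many new examples go by, while the copy that keeps updating drifts toward the new answer (close to 3).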

Can AI Be Creative?

This is the really interesting question! First, let’s understand how YOUR brain creates new ideas:

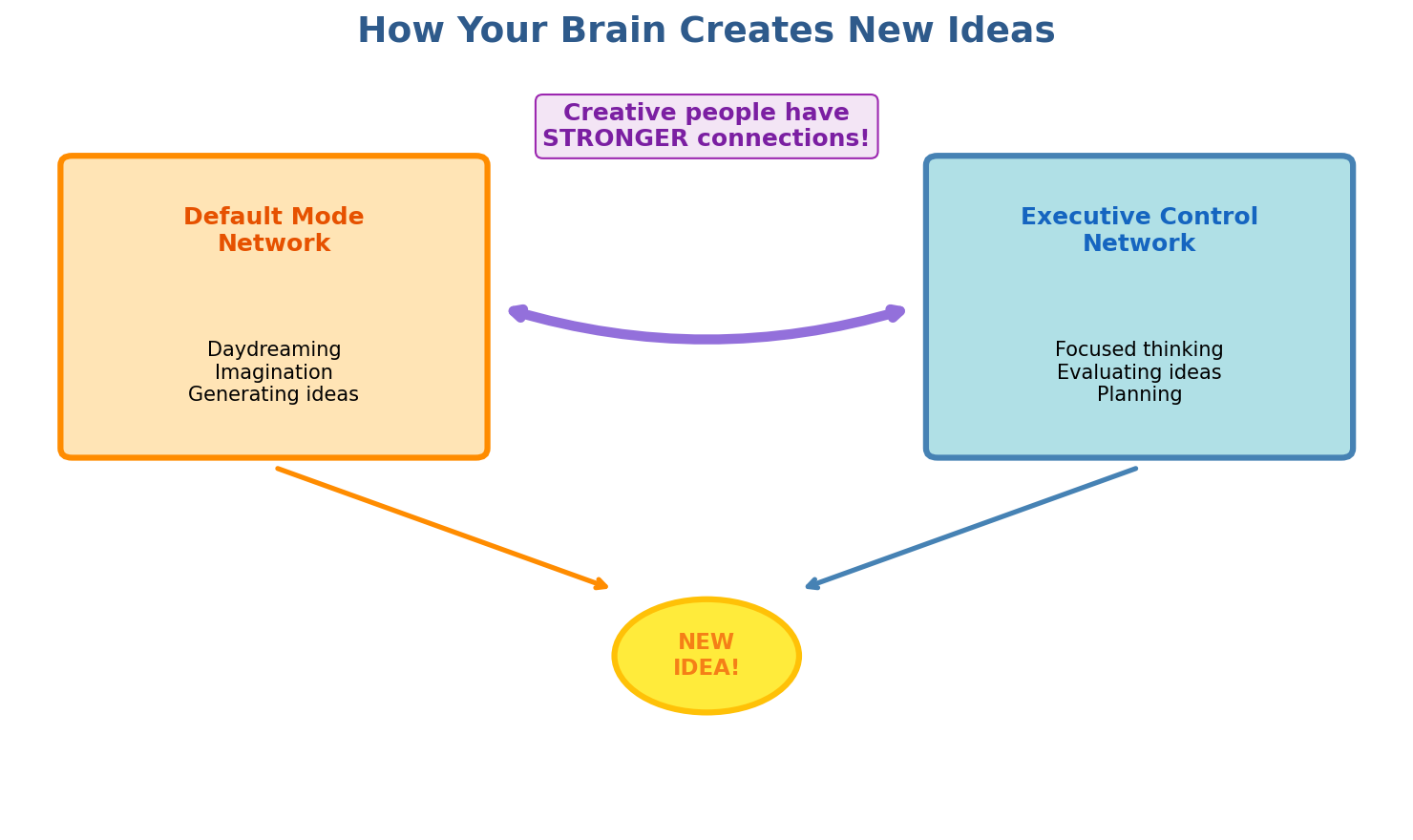

Creative thinking emerges from the interaction between the Default Mode Network and Executive Control Network

Research shows creative thinking happens when two brain networks work together: one for daydreaming and generating wild ideas, another for evaluating and refining them. Highly creative people have STRONGER connections between these networks!

So What About AI Creativity?

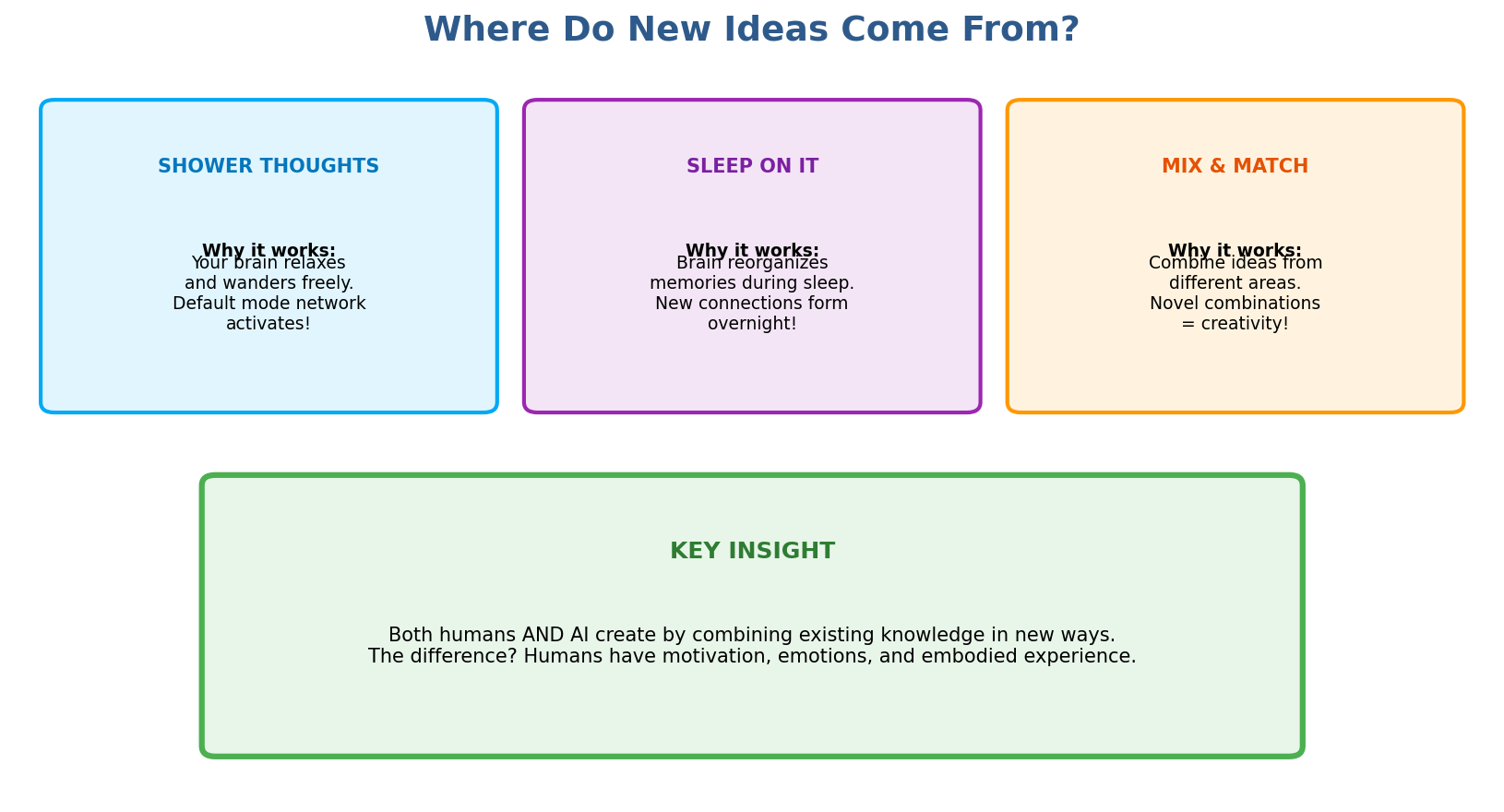

Shower thoughts, sleeping on it, and mixing ideas from different areas all contribute to creativity

The surprising truth: AI CAN produce creative outputs! It combines patterns in new ways, just like humans combine existing knowledge. Studies show AI can write stories and solve problems that humans rate as “creative.”

But there’s a catch: AI has no motivation. It doesn’t WANT to create. It doesn’t have that 3am itch to solve a problem. It only works when you ask it to.

Another issue: When everyone uses AI for ideas, individual creativity goes up, but collective diversity goes down. We might all end up with similar ideas!

Are LLMs Becoming More Like Human Thinking?

This is where things get really interesting! Modern Large Language Models (LLMs) are evolving rapidly, and in some ways, they ARE becoming more human-like:

Where LLMs Are Becoming More Human-Like

Multi-step reasoning: Newer models can break down complex problems into steps, revise their thinking, and even “catch” their own mistakes - more like how humans think through difficult problems.

Context awareness: Modern LLMs can maintain longer conversations and remember earlier parts of a discussion, similar to how you remember the flow of a conversation.

Transfer learning: Like humans applying knowledge from one domain to another, LLMs can apply patterns learned in one area to solve problems in completely different areas.

Common sense reasoning: Newer models are getting better at understanding implicit information and making reasonable assumptions - something humans do naturally but machines traditionally struggled with.

Where They Still Differ Fundamentally

No embodied experience: Humans learn by touching, moving, feeling. You know what “heavy” means because you’ve lifted things. LLMs only know words about heaviness.

No temporal continuity: You have a continuous stream of experience. LLMs only “exist” during the conversation - they don’t have ongoing internal thoughts between interactions.

No intrinsic goals: You get curious, bored, excited. You WANT things. LLMs have no desires, just patterns to match.

Learning style: You can learn from a single experience. Touch a hot stove once, lesson learned! LLMs need millions of examples during training and can’t permanently update from new experiences without retraining.
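The “no temporal continuity” point can be pictured with a small sketch. The `FrozenModel` class and its `reply` method are hypothetical stand-ins, not any real library's API. The assumption being illustrated is that the model's reply depends only on its fixed parameters and on whatever conversation text is handed to it each time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrozenModel:
    """Stand-in for a trained model: nothing inside it changes at chat time."""
    name: str

    def reply(self, history: tuple[str, ...]) -> str:
        # A real model would generate text; this stub only reports what it was given.
        return f"I can see {len(history)} message(s) in this conversation."


model = FrozenModel(name="toy-model")

# First conversation: the earlier message is visible because it is passed in.
first_chat = ("Hi, my name is Ada.", "What is my name?")
answer_1 = model.reply(first_chat)

# A new conversation later: nothing from the first chat carries over.
second_chat = ("What is my name?",)
answer_2 = model.reply(second_chat)

print(answer_1)
print(answer_2)
```

Anything that is not passed in again is simply gone, which is why this toy model “remembers” within a conversation but has nothing going on between conversations.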

The Trajectory

Here’s the fascinating part: The gap IS narrowing in some areas. Models are getting better at:

- Reasoning through multiple steps

- Recognizing when they’re uncertain

- Asking clarifying questions

- Integrating information from different contexts

But the fundamental differences - embodiment, motivation, continuous learning - remain. Whether these are just engineering challenges or fundamental limitations is still hotly debated!

The key insight: LLMs are becoming better at simulating certain aspects of human thinking, especially reasoning and language use. But simulation isn’t the same as the real thing - or is it? That’s the question keeping philosophers and AI researchers up at night.

The Hard Question: Does AI Really “Think”?

Philosophers and scientists still debate this! Here are the main views:

View 1 - “It’s just math”: AI manipulates symbols without understanding them. It’s like someone who doesn’t know Chinese following a rulebook to reply to messages written in Chinese - the replies look right, but the person doesn’t understand a word. (This is philosopher John Searle’s “Chinese Room” argument.)

View 2 - “Behavior is what matters”: If AI behaves intelligently, it IS intelligent. We can’t even prove other humans are truly conscious - we just assume it from their behavior.

View 3 - “We don’t know yet”: We don’t fully understand human consciousness, so how can we say what AI does or doesn’t have? Maybe we need new concepts entirely.

The honest answer: Nobody knows for sure. And that’s what makes it fascinating!

Frequently Asked Questions

Can AI really think like humans?

AI can simulate certain aspects of human thinking, especially reasoning and language use, but fundamental differences remain. AI lacks embodied experience (learning through physical interaction), intrinsic motivation (no desires or wants), and the ability to keep learning continuously, even from a single experience.

While modern LLMs are getting better at multi-step reasoning, recognizing uncertainty, and integrating information from different contexts, they still don’t have ongoing internal thoughts between interactions or learn from touching a hot stove once like humans do. Whether simulating thinking is the same as genuine thinking remains a hotly debated philosophical question.

How much power does a brain use compared to AI?

Your brain runs on about 20 watts - the same as a dim light bulb. That’s incredibly efficient for processing visual information, controlling movement, generating thoughts, and storing memories all at once.

In stark contrast, training a large AI model like GPT-4 is estimated to draw 20-25 megawatts continuously for months - about as much electricity as 20,000 homes use. Even running AI inference (just using a trained model) requires significantly more power than your brain uses for similar cognitive tasks.

This efficiency gap highlights how much we still have to learn from biological neural networks.

Can AI be creative?

Yes, AI can produce creative outputs! Studies show AI can:

- Write stories and poetry that humans rate as creative

- Combine patterns in novel ways

- Solve problems with unexpected approaches

- Generate art and music

However, there’s a crucial difference: AI has no intrinsic motivation. It doesn’t wake up at 3am with an idea it must explore. It doesn’t feel the itch to create. It only produces creative work when prompted by humans.

Research also shows an interesting paradox: AI enhances individual creativity but reduces collective diversity. When everyone uses AI for ideas, we might all end up with similar creative outputs.

What’s the biggest difference between brains and neural networks?

The three most fundamental differences are:

1. Learning Style:

- Humans: Learn continuously throughout life from single experiences (touch hot stove once → lesson learned forever)

- AI: Needs millions of examples during training, then is “frozen” until someone retrains it with new data

2. Embodiment:

- Humans: Learn through physical interaction - you know “heavy” because you’ve lifted things

- AI: Only processes symbolic data - knows words about heaviness but never lifted anything

3. Motivation:

- Humans: Have desires, get curious, feel bored, want things

- AI: Has no intrinsic goals or wants - just pattern matching

These aren’t just technical limitations; they’re fundamental differences in how the systems operate.

Are large language models becoming more like human thinking?

In some ways, yes! Modern LLMs are evolving to be more human-like in:

- Multi-step reasoning: Breaking down complex problems and revising their thinking

- Context awareness: Maintaining longer conversations and remembering earlier discussion points

- Transfer learning: Applying knowledge from one domain to solve problems in completely different areas

- Common sense reasoning: Getting better at understanding implicit information

But fundamental differences remain:

- No embodied experience through senses and movement

- No temporal continuity of consciousness between conversations

- No intrinsic goals, curiosity, or desires

- Can’t learn from single experiences without retraining

The gap is narrowing for certain cognitive tasks, but whether LLMs will ever have genuine understanding or just increasingly sophisticated simulation is still an open question. Research suggests experts can’t even agree on the criteria for determining if AI has a mind.

Want to Learn More? References

Brain Science

- Study Pinpoints Origins of Creativity in the Brain (University of Utah Health, 2024)

- Study pinpoints origins of creativity in the brain (@theU, 2024)

- Neural, genetic, and cognitive signatures of creativity (Communications Biology, 2024)

Neural Network Comparisons

- The structure dilemma in biological and artificial neural networks (Nature, 2024)

- Artificial vs. Biological Neurons: Beyond Comparison (arXiv, 2024)

AI Creativity Research

- Generative AI enhances individual creativity but reduces the collective diversity of novel content (Science Advances, 2024)

- Research: When Used Correctly, LLMs Can Unlock More Creative Ideas (Harvard Business Review, 2025)

- On the creativity of large language models (AI & Society, Springer, 2024)

Philosophy of Mind

- Why Experts Can’t Agree on Whether AI Has a Mind (TIME)

- Philosophy of artificial intelligence (Wikipedia)

Created by Claude based on human prompting and curiosity