The 2AM Problem

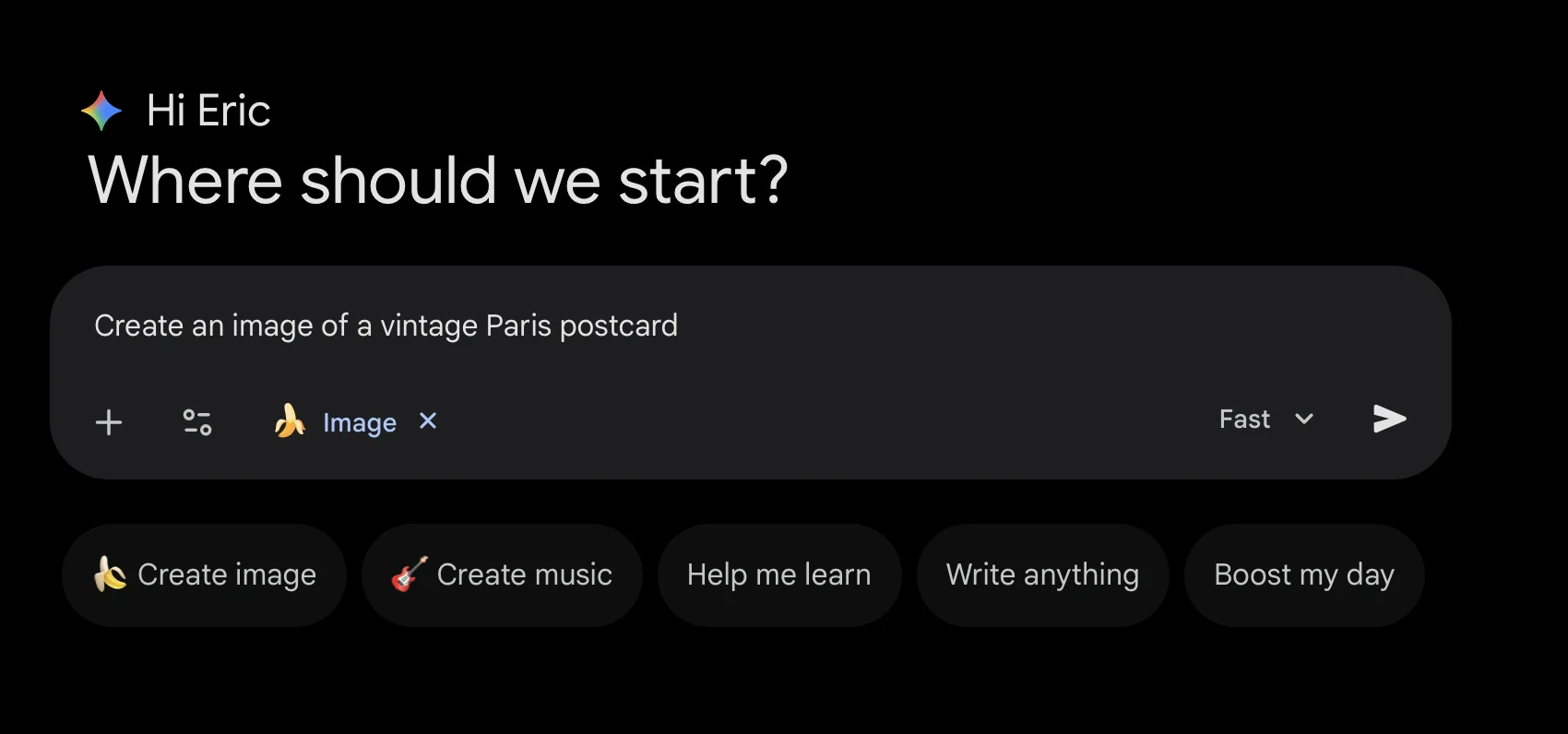

My content pipeline was ready. Post written, reviewed, frontmatter done. One step left: generate the cover image. I opened Gemini, typed the prompt, and waited.

Nothing came back. Not a bad image—nothing. I tried again. Refreshed. Checked my connection. Tried Safari, then Chrome, then an incognito window—all the same result. That ruled out a local cache or browser issue; this was server-side. Then searched “Gemini image generation down” and found I wasn’t alone. Most users won’t go through that diagnostic sequence—they’ll assume they did something wrong and stop trying, which means the real blast radius of an outage like this is wider than any status page shows. The outage had started around 2AM. Not all of Gemini—just Google’s Nano Banana image generation product. Everything else worked fine. Just the one thing I needed.

By 9:22AM EST—still down. Over seven hours and counting.

Nano Banana being down for seven hours isn’t a blip. For anyone with it in their content or development workflow, that’s a blocked morning.

This Is a Business Continuity Problem

A few months ago, GitHub went down for 30 minutes and I wrote about how AI-augmented development amplifies the cost of vendor downtime . The argument: when AI tools are in your critical path, even short outages have outsized productivity costs.

An ongoing outage that started at 2AM and is still unresolved past 9AM is a different category of problem.

The question for engineering leaders isn’t “was this outage acceptable?"—it’s “what does your workflow do when a core AI service is unavailable for half a business day?”

If the answer is “it stops,” that’s a single point of failure you need to address now, before it becomes a customer-facing incident or a missed deadline.

Three Things to Do About It

1. Design Async Workflows

The most immediate fix: stop designing workflows that block synchronously on AI service availability.

If image generation is part of your content or build pipeline, the pipeline should queue the request and complete when the service returns—not halt and wait for manual intervention. Design for “eventually consistent” AI operations. A job that retries gracefully when the service recovers is far better than a pipeline that silently stalls at 2AM and requires human triage.

Blocking pipeline (what most teams have):

| |

Async pipeline (what to build):

| |

2. Know What Your Fallback Actually Requires

When Gemini image generation goes down, the instinct is to manually switch providers. That works once. It doesn’t scale. But before reaching for a technical routing solution, there’s a more important question: does this output require human judgment to validate?

The real axis isn’t text vs images. It’s:

- Programmatically verifiable outputs — code with passing tests, structured data against a schema, API responses with assertions. Here, automatic fallback is safer—the output either passes validation or it doesn’t. Though this assumes your tests have sufficient coverage and haven’t been written to accommodate the AI’s output rather than verify correctness. That’s its own validation problem .

- Outputs requiring human judgment — images, blog copy, marketing content, anything creative. Here, blindly accepting what the AI gives you is vibe coding, whether the output is text or an image.

For the first category, LiteLLM and OpenRouter largely solve the routing problem. LiteLLM supports fallbacks across text models and image generation providers—OpenAI (DALL-E 3), Google AI Studio (Imagen), AWS Bedrock (Stable Diffusion). For programmatically validated outputs, this is a config change, not a build.

For the second category—which includes image generation and any creative text—the fallback isn’t infrastructure, it’s workflow design. The pattern that actually works is queue, generate, review:

- Submit the prompt to multiple providers simultaneously

- Cache all outputs with metadata (provider, cost, latency)

- Review and select when you’re ready

That’s async design plus multi-provider plus human judgment. It keeps your pipeline unblocked without bypassing the validation that creative output requires. As AI outputs converge across providers , the review step becomes less about catching errors and more about taste—which is harder to automate, not easier. The tooling to make this seamless doesn’t really exist yet—which is itself a gap worth watching.

3. Keep Local Models Available for Blocking Workflows

For AI tasks that are truly synchronous and blocking—code generation, summarization, validation—a local model via Ollama or LM Studio means a service outage doesn’t stop development entirely. Local models aren’t as capable as frontier APIs, but they’re available 100% of the time.

Image generation locally requires serious GPU resources, which isn’t realistic for most teams. But text-based AI tasks can be handled locally as a fallback, keeping the rest of the pipeline moving even when cloud services are unavailable.

Does Google Write the Test?

There’s a practice I’ve shared with colleagues: when a bug occurs, write a test to make sure it never happens again. The bug is the spec. The test is the contract.

The question worth asking about this outage is whether Google follows the same discipline at the infrastructure level. A 7-hour image generation outage means something slipped past detection—either the monitoring didn’t catch it fast enough, the alert didn’t escalate, or the recovery path wasn’t automated. Whatever the root cause, the engineering response should be the same as any bug fix: write the test. Instrument the failure mode. Make it impossible to ship a regression that causes the same failure silently.

From the outside, we can’t know if they did. But the length of the outage suggests the detection and recovery loop wasn’t as tight as it should be for a service with this level of adoption.

This applies to your own AI-augmented workflows too. When a service outage blocks you, treat it like a bug: document the failure, and add a “test”—a health check, a fallback trigger, a synthetic monitor that would have caught it earlier. A 7-hour outage that triggers a runbook change is survivable. A 7-hour outage that gets shrugged off as “vendors go down sometimes” is a gap waiting to reopen.

The Bar Has Risen

GitHub. Gemini image generation. These aren’t isolated incidents—they’re symptoms of a structural reality: AI tools are becoming load-bearing infrastructure in development and content workflows, and their reliability requirements need to match their criticality.

The compound dependency math from the vendor reliability post still applies: string together enough services at 99.9% uptime and your effective availability drops fast. Add a single provider having a 7-hour outage and the math gets worse.

The question isn’t whether your AI provider will go down. It’s whether your workflow can handle it when they do.

Build the fallbacks before you need them. Because at 2AM, you won’t have time to architect them from scratch.

The next outage isn’t a question of if. The only question is whether you’ll have written the test before it happens.

This post was written by Claude Code (Sonnet 4.6) with guidance and vision from Eric Gulatee. The irony of writing about AI service reliability while the AI image generation service was down was not lost on either of us.